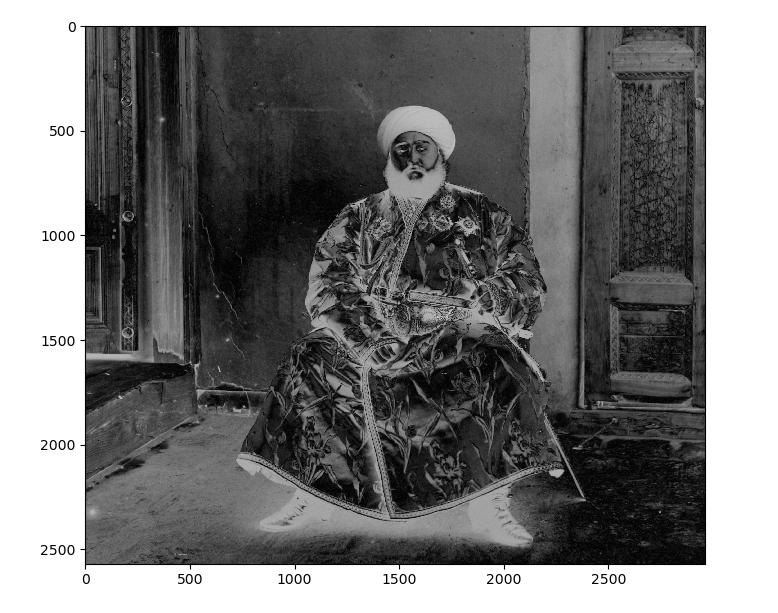

Sergei Mikhailovich Prokudin-Gorskii (1863-1944) [Сергей Михайлович Прокудин-Горский, to his Russian friends] was a man well ahead of his time. Convinced, as early as 1907, that color photography was the wave of the future, he won the Tsar's special permission to travel across the vast Russian Empire and take color photographs of everything he saw, including the only color portrait of Leo Tolstoy. And he really photographed everything: people, buildings, landscapes, railroads, bridges... thousands of color pictures! His idea was simple: record three exposures of every scene onto a glass plate using a red, a green, and a blue filter.

The goal of this project is to produce 1 color image given the 3 color filtered images.

The 3 color channels - R,G,B were extracted from the single glass plate image. This was done by dividing the height of the image into 3 equal parts and cropping the image as necessary.
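The split described above could be sketched as follows. This is a minimal sketch, not the project's actual code: `split_plate` is a hypothetical name, and the top-to-bottom order blue, green, red is an assumption about how the plate scan is laid out.

```python
import numpy as np

def split_plate(plate):
    """Split a vertically stacked plate scan into three equal-height channels."""
    h = plate.shape[0] // 3      # height of one channel; leftover rows are dropped
    b = plate[0:h]               # assumed order on the plate: blue on top,
    g = plate[h:2 * h]           # green in the middle,
    r = plate[2 * h:3 * h]       # red at the bottom
    return r, g, b
```

If the plate height is not a multiple of 3, the integer division simply discards the one or two extra rows at the bottom.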

The 3 color channels are slightly offset from one another due to slight movements, so each channel needs to be translated to align it with the others.

I used the blue channel as the base and aligned the red and green channels to it. I did a grid search over displacements of ±15 pixels along both the x-axis and the y-axis.

I did a preprocessing step here to normalize each color channel.

I normalized by:

$$ \frac{X - \mathrm{mean}(X)}{\mathrm{std}(X)} $$

where X is the image matrix.
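In NumPy this normalization is a one-liner. The sketch below uses a hypothetical helper name, `normalize`, and assumes the channel is not constant, since a constant channel has zero standard deviation.

```python
import numpy as np

def normalize(x):
    """Return x shifted to zero mean and scaled to unit standard deviation."""
    x = x.astype(np.float64)     # avoid integer overflow and truncation
    return (x - x.mean()) / x.std()
```

After this step every channel has mean 0 and standard deviation 1, so differences in overall brightness between the filtered exposures have less influence on the alignment score.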

The metric I used for the alignment was the Sum of Squared Difference (SSD).

$$ \sum_{x}\sum_{y}\bigl(\mathrm{ch1}(x, y) - \mathrm{ch2}(x, y)\bigr)^2 $$

Here ch1 is the channel being aligned (red or green) and ch2 is the blue base channel.
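The exhaustive search with the SSD metric could look roughly like the sketch below. The names `ssd` and `align` are hypothetical, the inputs are assumed to be already normalized, and `np.roll` is used for shifting as a simplification: it wraps pixels around the border rather than discarding them.

```python
import numpy as np

def ssd(ch1, ch2):
    """Sum of squared differences between two equally sized channels."""
    return float(np.sum((ch1 - ch2) ** 2))

def align(channel, base, radius=15):
    """Try every (dy, dx) in [-radius, radius]^2 and keep the lowest SSD."""
    best_shift, best_score = (0, 0), np.inf
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))  # circular shift
            score = ssd(shifted, base)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

With a radius of 15 this evaluates 31 × 31 = 961 candidate shifts per channel, which is affordable for small images but becomes slow for large scans.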

Once the translation vector for each color channel is known from the image alignment, that color channel is cropped and padded with 0s so that it retains its original dimensions. The 3 color channels are then stacked along the third dimension to obtain a single RGB image.
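One way to express the crop-and-pad step is to copy the overlapping window of the channel into a zero-filled array of the same size. This is only a sketch: `shift_zero_pad` is a hypothetical name, and positive `dy`/`dx` are assumed to mean a shift down and to the right.

```python
import numpy as np

def shift_zero_pad(channel, dy, dx):
    """Translate a 2D channel by (dy, dx), filling the exposed border with 0s."""
    h, w = channel.shape
    out = np.zeros_like(channel)
    if abs(dy) >= h or abs(dx) >= w:
        return out                       # shifted completely out of frame
    src_y = slice(max(0, -dy), min(h, h - dy))
    dst_y = slice(max(0, dy), min(h, h + dy))
    src_x = slice(max(0, -dx), min(w, w - dx))
    dst_x = slice(max(0, dx), min(w, w + dx))
    out[dst_y, dst_x] = channel[src_y, src_x]
    return out

def stack_rgb(r, g, b):
    """Stack three aligned channels along the third axis into an RGB image."""
    return np.dstack([r, g, b])
```

Because the output has the same shape as the input, the shifted red and green channels can be stacked directly with the unshifted blue channel.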

I recursively scale the image down by a factor of 2 for a user-defined number of levels n, and at each level perform a grid search of 60/scale pixels along both the x and y axes.
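A coarse-to-fine search along these lines is sketched below. It is one possible reading of the description, not the project's code: `search` and `pyramid_align` are hypothetical names, downscaling is done by plain subsampling (`[::2, ::2]`) with no smoothing, shifts use the wrap-around `np.roll`, and the estimate from the coarser level is doubled and used as the centre of the window at the next level.

```python
import numpy as np

def ssd(a, b):
    return float(np.sum((a - b) ** 2))

def search(channel, base, center, radius):
    """Grid search of +/- radius pixels around a starting shift."""
    cy, cx = center
    best, best_score = center, np.inf
    for dy in range(cy - radius, cy + radius + 1):
        for dx in range(cx - radius, cx + radius + 1):
            score = ssd(np.roll(channel, (dy, dx), axis=(0, 1)), base)
            if score < best_score:
                best_score, best = score, (dy, dx)
    return best

def pyramid_align(channel, base, levels, full_radius=60, scale=1):
    """Recursive alignment; the window at each level is full_radius / scale."""
    radius = max(1, full_radius // scale)
    if levels == 0:
        return search(channel, base, (0, 0), radius)      # coarsest level
    # Solve the half-resolution problem first (assumption: simple subsampling).
    cy, cx = pyramid_align(channel[::2, ::2], base[::2, ::2],
                           levels - 1, full_radius, scale * 2)
    # A shift of 1 pixel at half resolution is 2 pixels at this resolution.
    return search(channel, base, (2 * cy, 2 * cx), radius)
```

The point of the pyramid is that a large displacement in the full-size image becomes a small one at the coarsest level, so a small window there can cover what would need a very large window at full resolution.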

I implemented a 2D convolution that convolves the image with any user-defined filter. There is also a fast convolution solution using only numpy, which worked better than my implementation using loops.
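As an illustration of a loop-free version, the sketch below builds all kernel-sized windows with `sliding_window_view` (available in NumPy 1.20 and later) and contracts them against the flipped kernel. It is one possible vectorized approach, not necessarily the one used here; `convolve2d` is a hypothetical name, and zero padding and odd kernel sizes are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def convolve2d(image, kernel):
    """Same-size 2D convolution with zero padding (odd kernel sizes assumed)."""
    kh, kw = kernel.shape
    padded = np.pad(image.astype(np.float64),
                    ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    windows = sliding_window_view(padded, (kh, kw))   # shape (H, W, kh, kw)
    flipped = kernel[::-1, ::-1]                      # flip: convolution, not correlation
    return np.einsum("ijkl,kl->ij", windows, flipped)
```

Convolving a single bright pixel with a kernel reproduces the kernel around that pixel, which is a quick sanity check for any convolution routine.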

Using this filtering technique, I implemented edge detection by convolving the image with the Sobel operator.

I used 2 matrices - G_x and G_y to calculate the gradient in both the x and y axis.

G_x is defined as:

$$ \begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix} $$

G_y is defined as:

$$ \begin{bmatrix} 1 & 2 & 1 \\ 0 & 0 & 0 \\ -1 & -2 & -1 \end{bmatrix} $$

These matrices detect edges along the x or y axis. Edge detection was particularly useful for the emir image, which was not properly aligned without the edge detector. The image generated by the edge detector was passed to the alignment algorithm, which then did much better. This was because the features were more prominent and therefore easier to match.
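An edge image of this kind could be computed as in the sketch below. Several choices are assumptions rather than details from this write-up: the two gradients are combined into a single magnitude with `np.hypot`, the border is padded by replicating edge pixels, and the helper `filter3x3` applies the kernel without flipping it (cross-correlation), which only changes the sign of each gradient and leaves the magnitude unchanged.

```python
import numpy as np

# Standard 3x3 Sobel kernels for the x and y gradients.
G_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)
G_Y = np.array([[1, 2, 1], [0, 0, 0], [-1, -2, -1]], dtype=np.float64)

def filter3x3(image, kernel):
    """Apply a 3x3 kernel to a 2D image using edge-replicating padding."""
    h, w = image.shape
    padded = np.pad(image.astype(np.float64), 1, mode="edge")
    out = np.zeros((h, w))
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * padded[i:i + h, j:j + w]
    return out

def sobel_edges(image):
    """Gradient magnitude from the x and y Sobel responses."""
    gx = filter3x3(image, G_X)
    gy = filter3x3(image, G_Y)
    return np.hypot(gx, gy)
```

Each channel would be passed through `sobel_edges` before alignment, and the shift found on the edge images would then be applied to the original channels.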

A simple yet effective technique was used. I subtracted the global minimum pixel intensity from each pixel in the image array and divided the result by the maximum pixel intensity. This produces a floating-point matrix with values between 0.0 and 1.0. I then multiplied each pixel by 255 and cast the values to uint8.
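The rescaling can be sketched as below. `rescale_contrast` is a hypothetical name, and the sketch assumes that the maximum is taken after the minimum has been subtracted, which is what makes the result span exactly 0.0 to 1.0.

```python
import numpy as np

def rescale_contrast(image):
    """Stretch intensities to the full 0..255 range and return uint8."""
    img = image.astype(np.float64)
    img = img - img.min()            # darkest pixel becomes 0.0
    peak = img.max()                 # assumption: maximum taken after the shift
    if peak > 0:                     # guard against a constant image
        img = img / peak             # brightest pixel becomes 1.0
    return (img * 255).astype(np.uint8)
```

Note that the cast to `uint8` truncates rather than rounds, so an intermediate value such as 127.5 becomes 127.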

All images are dynamically cropped. This is done by taking the known displacement of each channel and using the maximum displacement on both the x and y axes.
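A simple version of this crop is sketched below. It is an interpretation of the description, not the project's code: `crop_by_displacement` is a hypothetical name, `shifts` is assumed to be a list of `(dy, dx)` pairs for the aligned channels, and the same margin is removed from both sides of each axis.

```python
import numpy as np

def crop_by_displacement(rgb, shifts):
    """Trim a border as wide as the largest channel displacement on each axis."""
    max_dy = max(abs(dy) for dy, _ in shifts)
    max_dx = max(abs(dx) for _, dx in shifts)
    h, w = rgb.shape[:2]
    return rgb[max_dy:h - max_dy, max_dx:w - max_dx]
```

Cropping by the largest displacement removes the zero-padded strips left behind by the shifted channels, so every remaining pixel has data in all three channels.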